Go HA

WebLogic provides many ways to automate the lifecycle of cluster members to achieve server/service high availability without affecting service quality. For instance, we can configure whole server migration so that, in the event of a physical machine failure, the cluster members hosted on that machine are started on another machine.

There are alternative ways to manage server migration (or process migration, at the OS level), or simply to control the lifecycle of a process. These alternatives include the built-in (or packaged) clustering software that comes with various flavors of Linux/Unix.

This post discusses one possible way to make such clustering software control WebLogic instances.

The central piece of this setup is a WebLogic Scripting Tool (WLST) script. This WLST script is invoked from a service script (just a specific form of shell script used to execute the lifecycle activities of a process) with an appropriate argument, such as start, stop or status. The WLST script has methods that interact with nodemanager to start and stop the server and to check its status. The same WLST script also contains the logic for LSB compliance and returns the appropriate exit code to the calling service script. The service script has to return the same exit code to the OS, and can also display further diagnostic messages if need be.

The WLST script needs environment variables to get hold of the nodemanager and server details. Typically, the service script sets these variables just before it invokes the WLST script; they are listed in step 3 below.

If the WebLogic domain spans multiple physical servers, there is one copy of this WLST script (in DOMAIN_HOME) per box. And of course, there has to be one service script per WebLogic server instance on each box. (A word to the wise: it is alright if all of this does not make sense yet; just follow the rest of the post and it should fall into place.)
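To make the "LSB compliance" part more concrete, here is a hedged sketch of the decision logic such a script might contain. It is plain Python 3, not the author's service_helper.py: `start_fn`, `stop_fn` and `state_fn` are hypothetical stand-ins for the nodemanager calls the real WLST script makes (for example WLST's `nmStart` and `nmServerStatus`), and which WebLogic states count as "running" or "stopped" is an assumed policy. The exit code values and the rule that starting a running service (or stopping a stopped one) counts as success follow the LSB init-script conventions.

```python
# Exit codes for start/stop, per the LSB init-script conventions.
OK = 0
GENERIC_ERROR = 1
INVALID_ARGS = 2

# Exit codes for "status", per the LSB init-script conventions.
STATUS_RUNNING = 0
STATUS_NOT_RUNNING = 3
STATUS_UNKNOWN = 4

# Assumed policy: which WebLogic server states count as running or stopped.
RUNNING_STATES = ("RUNNING",)
STOPPED_STATES = ("SHUTDOWN", "FAILED_NOT_RESTARTABLE")


def status_code(state):
    """Translate a WebLogic server state string into an LSB status exit code."""
    if state in RUNNING_STATES:
        return STATUS_RUNNING
    if state in STOPPED_STATES:
        return STATUS_NOT_RUNNING
    return STATUS_UNKNOWN  # e.g. STARTING, SHUTTING_DOWN, UNKNOWN


def run_action(action, start_fn, stop_fn, state_fn):
    """Run start/stop/status and return an LSB exit code.

    start_fn/stop_fn are placeholders returning True on success;
    state_fn is a placeholder returning a WebLogic state string.
    """
    if action == "status":
        return status_code(state_fn())
    if action == "start":
        # LSB: starting an already running service counts as success.
        if state_fn() in RUNNING_STATES:
            return OK
        return OK if start_fn() else GENERIC_ERROR
    if action == "stop":
        # LSB: stopping an already stopped service counts as success.
        if state_fn() in STOPPED_STATES:
            return OK
        return OK if stop_fn() else GENERIC_ERROR
    return INVALID_ARGS  # unsupported argument
```

The service script would then simply `exit` with whatever code the WLST script returned. Actions such as restart are left out of this sketch.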

Here is what needs to be done:

0. Associate all the WebLogic server instances (including the Admin Server) with machines, and then configure nodemanagers for these machines. Here are the details on nodemanager:

1. Make sure that there is an entry for the concerned domain in the file:

NODEMANAGER_HOME/nodemanager.domains
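If you want to check this from a script rather than by eye, a small helper is enough. This is a hypothetical Python 3 sketch, and it assumes the usual layout of nodemanager.domains: one `DomainName=DomainHome` entry per line, with `#` or `!` comment lines. It is a simplified reader, not a full Java properties parser.

```python
def find_domain(lines, domain_name):
    """Return the directory registered for domain_name, or None if absent.

    Simplified parser (an assumption): one "name=path" entry per line,
    comment lines start with '#' or '!'.
    """
    for raw in lines:
        line = raw.strip()
        if not line or line[0] in "#!":
            continue  # skip blank and comment lines
        name, sep, path = line.partition("=")
        if sep and name.strip() == domain_name:
            return path.strip()
    return None
```

For example, open NM_HOME/nodemanager.domains and pass the file object together with "TestDomain1"; a result of None means the entry is missing.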

2. Generate "userConfigFile" and "userKeyFile" so that the scripts responsible for starting, stopping and checking the status of the WebLogic instances do not need a clear-text username and password.

Here are the steps:

a) First, make sure that the nodemanager credentials (username and password) for this domain are the same as those of the domain administrator. If they are different, you would have to generate two config files and two key files: one pair for connecting to the Admin Server and another for connecting to nodemanager (review the WLST script to see what I am talking about).

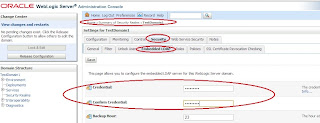

From the admin console, navigate to DOMAIN_NAME >> Security >> General >> Advanced.

Change the values for "NodeManager Username:", "NodeManager Password:", and "Confirm NodeManager Password:". Set them to the domain administrator credentials.

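Also, for each server, consider setting the following in its Server Start arguments (Configuration >> Server Start >> Arguments), which nodemanager uses when it starts the server:

- -Xms, -Xmx and -XX:MaxPermSize

This is just to make sure that we don’t rely on the default values, especially for the permanent generation, whose default size is often too small for the server to start up fully into RUNNING mode.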

b) Start an SSH terminal, connect to the server machine and navigate to the domain home. From there, set the environment using the script in the “bin” directory:

/opt/Oracle/Middleware/user_projects/domains/TestDomain1/bin$ . ./setDomainEnv.sh

Now, start WLST using command:

java weblogic.WLST

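c) Once at the WLST offline prompt (wls:/offline>), connect to the running AdminServer with connect(), using the domain administrator credentials and the admin URL.

d) Execute the following command: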

storeUserConfig()

For details on the storeUserConfig command, refer to: http://download.oracle.com/docs/cd/E17904_01/web.1111/e13813/reference.htm#i1064674

e) Also execute the following command for each WebLogic instance that exists on this physical machine:

nmGenBootStartupProps('<SERVER_NAME>')

f) Execute the following command to exit from WLST:

exit()

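g) Move the two generated files (“root-WebLogicConfig.properties” and “root-WebLogicKey.properties”) into DOMAIN_HOME. By default, storeUserConfig() writes them to the home directory of the user running WLST (root in this case, hence the file names). Also, place the WLST script file “service_helper.py” into DOMAIN_HOME.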
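Steps d) to f) can also be scripted. storeUserConfig() accepts explicit file paths as its first two arguments, so one option is to write the two files straight into DOMAIN_HOME and skip the move in step g). The hypothetical Python 3 helper below only builds the WLST command lines (one nmGenBootStartupProps call per server on this machine); you would paste or feed them to WLST after connecting in step c). The `os_user` parameter is an assumption used to keep the “root-” file names that the service scripts expect.

```python
def wlst_setup_commands(domain_home, server_names, os_user="root"):
    """Build the WLST commands for steps d) to f) as a list of strings."""
    config_file = "%s/%s-WebLogicConfig.properties" % (domain_home, os_user)
    key_file = "%s/%s-WebLogicKey.properties" % (domain_home, os_user)
    # Step d): store the encrypted credentials directly in DOMAIN_HOME.
    commands = ["storeUserConfig('%s', '%s')" % (config_file, key_file)]
    # Step e): one call per WebLogic instance hosted on this machine.
    for name in server_names:
        commands.append("nmGenBootStartupProps('%s')" % name)
    # Step f): leave WLST.
    commands.append("exit()")
    return commands
```

For the example domain, `print("\n".join(wlst_setup_commands("/opt/Oracle/Middleware/user_projects/domains/TestDomain1", ["AdminServer", "MS1"])))` prints the commands to run. storeUserConfig() still asks for confirmation interactively.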

3. Assuming we have one admin server (“AdminServer”) and one managed server (“MS1”), duplicate the service script file “Server” as “AdminServer” and “MS1”, and edit both copies to set the following properties:

export DOMAIN_HOME=/opt/Oracle/Middleware/user_projects/domains/TestDomain1

export DOMAIN_NAME=TestDomain1

export SERVER_NAME=AdminServer   # or MS1

export IS_ADMIN=TRUE   # FALSE if SERVER_NAME is set to MS1

export ADMIN_URL=t3://mac11.oracle.vm:7001

export NM_HOME=/opt/Oracle/Middleware/wlserver_10.3/common/nodemanager

export NM_HOST=mac11.oracle.vm

export NM_PORT=5556

export USER_CONFIG_FILE=$DOMAIN_HOME/root-WebLogicConfig.properties

export USER_KEY_FILE=$DOMAIN_HOME/root-WebLogicKey.properties
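Because the WLST script depends entirely on these variables, it is worth failing fast when one is missing. The following is a hedged Python 3 sketch of such a check; the helper name and the returned dictionary are illustrative assumptions, while the variable names are the ones listed above.

```python
import os

# Variables the service script exports before invoking the WLST script.
REQUIRED_VARS = (
    "DOMAIN_HOME", "DOMAIN_NAME", "SERVER_NAME", "IS_ADMIN", "ADMIN_URL",
    "NM_HOME", "NM_HOST", "NM_PORT", "USER_CONFIG_FILE", "USER_KEY_FILE",
)


def load_settings(environ=os.environ):
    """Return the settings as a dict, or raise ValueError on bad configuration."""
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise ValueError("missing environment variables: %s" % ", ".join(missing))
    settings = dict((name, environ[name]) for name in REQUIRED_VARS)
    if settings["IS_ADMIN"] not in ("TRUE", "FALSE"):
        raise ValueError("IS_ADMIN must be TRUE or FALSE")
    settings["IS_ADMIN"] = settings["IS_ADMIN"] == "TRUE"
    # int() raises ValueError if NM_PORT is not a number.
    settings["NM_PORT"] = int(settings["NM_PORT"])
    return settings
```

A script using this could catch ValueError and exit with 6, which the LSB conventions define as "program is not configured".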

4. Now install the two files as services by copying them into “/etc/init.d” (and making them executable).

5. Use the following commands to start the services for the first time:

service AdminServer start

service MS1 start

Note that MS1 depends on AdminServer, so start MS1 only after AdminServer has started up successfully.
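If you script this first start-up, the dependency can be enforced by polling the AdminServer service until its status action reports running (exit code 0 under LSB). The Python 3 helper below is a hypothetical sketch; the 600-second timeout and 10-second interval are arbitrary assumptions you would tune to how long your Admin Server takes to come up.

```python
import time


def wait_until(check, timeout=600.0, interval=10.0,
               clock=time.monotonic, sleep=time.sleep):
    """Call check() until it returns True or `timeout` seconds have elapsed.

    Returns True if check() succeeded in time, False on timeout. `clock`
    and `sleep` are injectable so the function can be tested quickly.
    """
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

Usage might look like `wait_until(lambda: subprocess.call(["service", "AdminServer", "status"]) == 0)`, followed by `subprocess.call(["service", "MS1", "start"])` only when it returns True.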

6. Repeat the following steps on each physical machine that the WebLogic domain spans:

2b-g, 3, 4, 5

7. Now configure the High Availability solution’s monitoring console (HA MC) to import these two services as LSB services. From this point onwards, you should be able to control them using the HA MC.

I tried this setup by installing the WebLogic instances as services in /etc/init.d and then managing them as LSB services in DRBD Management Console release 0.9.9 on SLES 10, and things looked good.

mac11:~ # uname -a

Linux mac11 2.6.16.60-0.54.5-default #1 Fri Sep 4 01:28:03 UTC 2009 x86_64 x86_64 x86_64 GNU/Linux

Since this setup checks the status and starts/stops the servers through WLST and nodemanager, these operations take some time. So one piece of advice: the start, stop, status and monitor interval timeouts need to be tuned to make things work. Also, if you plan to start or shut down the servers outside the HA MC, you still have to use methods that go through nodemanager; otherwise nodemanager loses track of the WebLogic instances, and things break.
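One way to base those timeouts on measurements rather than guesses is to time the service scripts themselves. The Python 3 helper below is a hypothetical sketch: it runs a command a few times and reports the slowest run together with the exit codes, so you can set the HA MC timeouts comfortably above the slowest observed time. How much headroom to add is a judgement call not covered in this post.

```python
import subprocess
import time


def time_command(cmd, runs=3):
    """Run cmd `runs` times; return (slowest_seconds, exit_codes)."""
    slowest = 0.0
    codes = []
    for _ in range(runs):
        started = time.monotonic()
        codes.append(subprocess.call(cmd))  # blocks until the command exits
        slowest = max(slowest, time.monotonic() - started)
    return slowest, codes
```

For example, `time_command(["service", "MS1", "status"])` measures the status check that the monitor operation depends on.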

Hope this helps you guys to go HA out there!
